Preface

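1. Why do we need a thread pool? A thread pool (Thread Pool) applies the pooling idea to thread management. Creating and destroying threads frequently, and scheduling them, carries a significant performance cost. A thread pool manages threads centrally: it tracks their state, reuses them, creates them on demand, and caps how many may exist. Threads are a scarce resource, and creating them without limit bloats the thread count, causes excessive scheduling, and ends up hurting performance. The thread pool implemented in the JDK is worth understanding in depth. The benefits of using a thread pool are: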

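Lower overhead: reusing idle threads reduces the cost of creating threads.

Faster response: if idle threads have been started in advance, an arriving task can run immediately instead of waiting for a thread to be created.

Unified management: threads are managed in one place, including their state, scheduling, allocation, and destruction at the appropriate time.

Extensibility: developers can extend the pool with additional features, such as scheduled tasks.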
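
To make the reuse point concrete, here is a minimal sketch. The class and method names (`ThreadReuseDemo`, `distinctWorkerThreads`) and the pool sizes are made up for illustration; only the `ThreadPoolExecutor` API is real. It pushes many small tasks through a small pool and counts how many distinct threads actually ran them.

```java
import java.util.Set;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class ThreadReuseDemo {
    /** Runs taskCount tiny tasks on poolSize threads and returns how many distinct threads did the work. */
    static int distinctWorkerThreads(int poolSize, int taskCount) {
        Set<String> names = ConcurrentHashMap.newKeySet();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                poolSize, poolSize, 0L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(taskCount));
        try {
            for (int i = 0; i < taskCount; i++) {
                // Each task only records which thread it ran on.
                pool.execute(() -> names.add(Thread.currentThread().getName()));
            }
            pool.shutdown();
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return names.size();
    }

    public static void main(String[] args) {
        System.out.println("distinct threads used for 20 tasks: " + distinctWorkerThreads(2, 20));
    }
}
```

However many tasks are submitted, the number of threads created stays within the pool size, which is the saving the list above describes.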

ThreadPoolExecutor

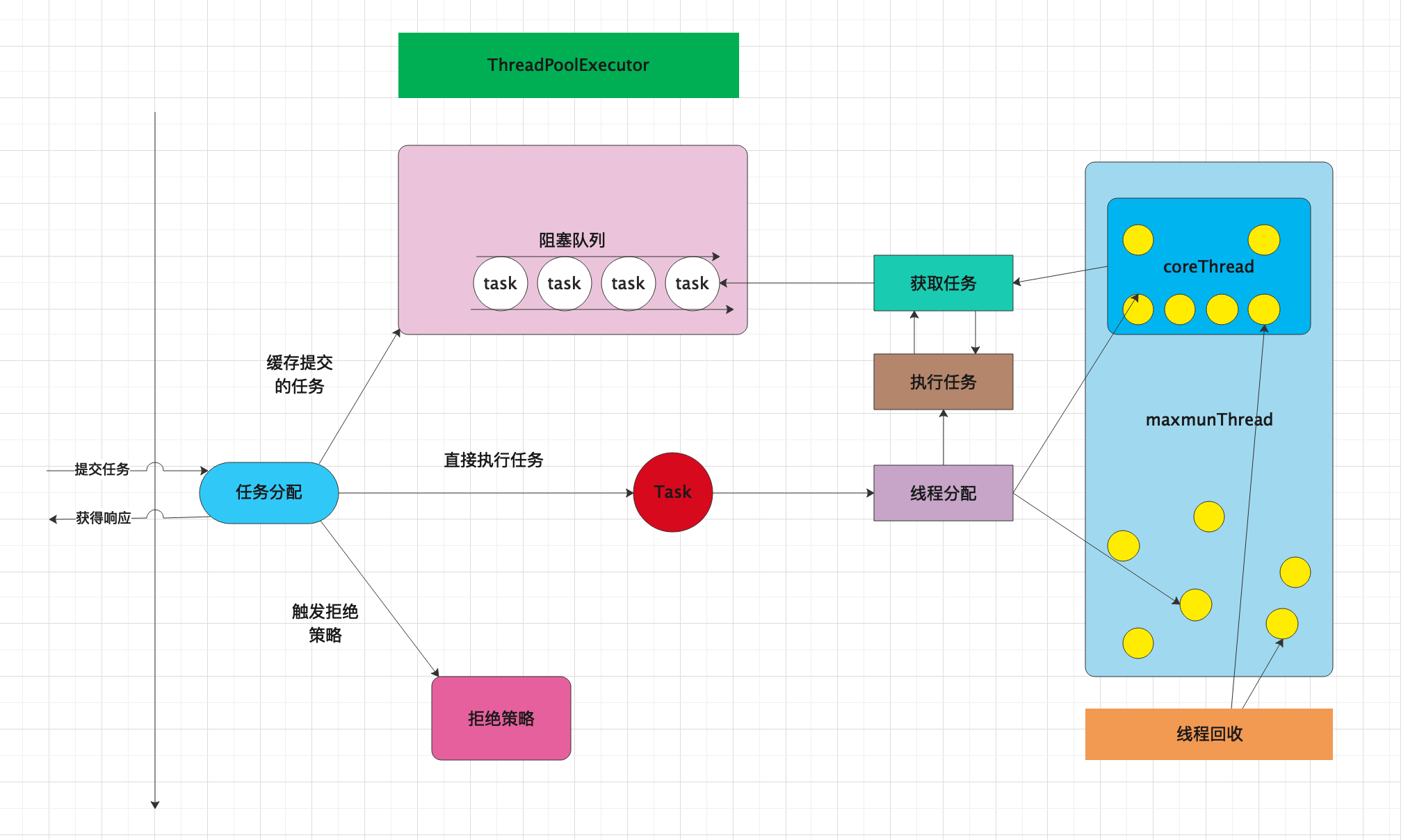

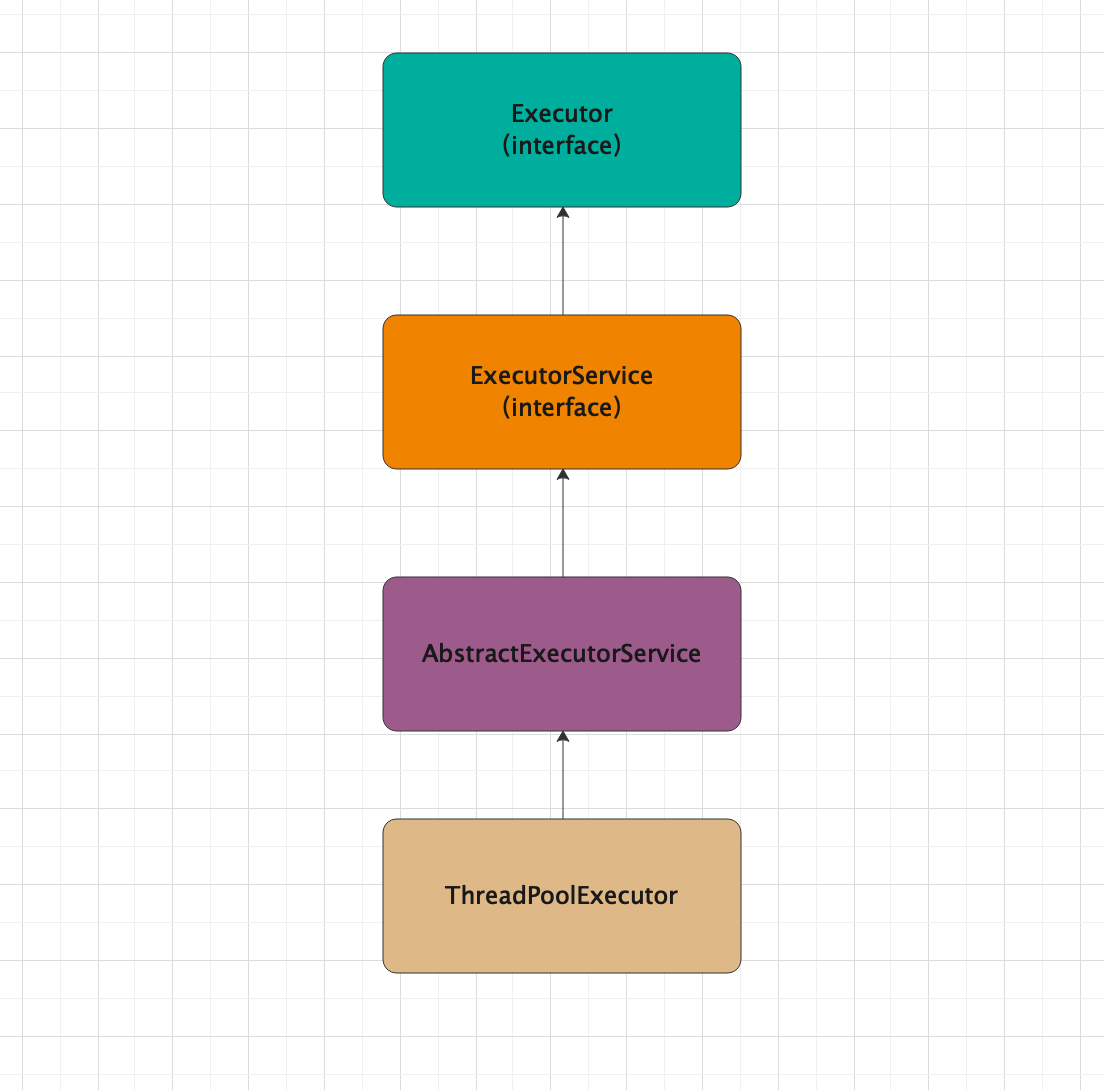

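1. ThreadPoolExecutor is a core implementation of Executor, the top-level thread pool interface, and the one most often encountered in day-to-day development. Understanding it in depth gives a clearer picture of how a thread pool is designed and of the ideas behind that design. Four types matter here: Executor, ExecutorService, AbstractExecutorService, and ThreadPoolExecutor. Their relationship runs from top to bottom in that order: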

Executor

1. The top-level thread pool interface. Its purpose is to decouple task submission from task execution: the caller does not need to know how a task is run and only has to hand over the task (a Runnable).

    public interface Executor {
        /**
         * Executes the given command at some time in the future. The command
         * may execute in a new thread, in a pooled thread, or in the calling
         * thread, at the discretion of the {@code Executor} implementation.
         *
         * @param command the runnable task
         * @throws RejectedExecutionException if this task cannot be
         * accepted for execution
         * @throws NullPointerException if command is null
         */
        void execute(Runnable command);
    }
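
As a rough illustration of that decoupling, the sketch below submits the same task through two hand-written implementations of `Executor`. The names `ExecutorDecouplingDemo`, `DirectExecutor`, `ThreadPerTaskExecutor`, and `submitGreeting` are assumptions made for this example, not JDK classes. The submitting code is identical in both cases; only the executor decides where the task runs.

```java
import java.util.concurrent.Executor;
import java.util.function.Consumer;

final class ExecutorDecouplingDemo {
    /** Runs each task immediately on the thread that submits it. */
    static final class DirectExecutor implements Executor {
        @Override
        public void execute(Runnable command) {
            command.run();
        }
    }

    /** Starts a fresh thread for every task (no pooling; shown only for contrast). */
    static final class ThreadPerTaskExecutor implements Executor {
        @Override
        public void execute(Runnable command) {
            new Thread(command).start();
        }
    }

    /** The submitting side depends only on the Executor interface. */
    static void submitGreeting(Executor executor, Consumer<String> out) {
        executor.execute(() -> out.accept("ran on " + Thread.currentThread().getName()));
    }

    public static void main(String[] args) {
        submitGreeting(new DirectExecutor(), System.out::println);        // runs on the main thread
        submitGreeting(new ThreadPerTaskExecutor(), System.out::println); // runs on a new thread
    }
}
```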

ExecutorService

1. Also an interface. It extends Executor with some basic additions, such as shutdown and shutdownNow for stopping the pool and submit for tasks that return a result:

    public interface ExecutorService extends Executor {
        void shutdown();
        List<Runnable> shutdownNow();
        boolean isShutdown();
        <T> Future<T> submit(Callable<T> task);
        // ...
    }
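
A short sketch of how these methods are typically used together: submit a Callable, read the result from the returned Future, then shut the pool down. The class name `ExecutorServiceDemo`, the method `sumOnPool`, and the pool parameters are illustrative assumptions.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class ExecutorServiceDemo {
    /** Computes a + b on a pool thread and returns the result to the caller. */
    static int sumOnPool(int a, int b) {
        ExecutorService pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(4));
        try {
            Future<Integer> future = pool.submit(() -> a + b); // the lambda is a Callable<Integer>
            return future.get(5, TimeUnit.SECONDS);            // blocks until the result is ready
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException | TimeoutException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown(); // stop accepting tasks; already submitted tasks still run
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOnPool(2, 3));
    }
}
```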

AbstractExecutorService

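1. The upper-level abstract class. It ties the task-submission flow together and decouples it from the lower layer, so a subclass only needs to care about the method that actually executes a task:

    public abstract class AbstractExecutorService implements ExecutorService {
        // body omitted
    }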
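
To show what "a subclass only needs to care about execution" can look like, here is a deliberately simple, hypothetical subclass (`SameThreadExecutorService`, not a JDK class) that runs every task on the calling thread. It implements only `execute` and the life-cycle methods; `submit` is inherited from `AbstractExecutorService`, which wraps the task in a Future and passes it to `execute`.

```java
import java.util.Collections;
import java.util.List;
import java.util.concurrent.AbstractExecutorService;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;

/** Hypothetical executor that runs tasks on the caller's thread. */
final class SameThreadExecutorService extends AbstractExecutorService {
    private volatile boolean shutdown;

    @Override
    public void execute(Runnable command) {
        if (shutdown) {
            throw new RejectedExecutionException("executor is shut down");
        }
        command.run(); // the only "execution strategy" this class provides
    }

    @Override public void shutdown() { shutdown = true; }
    @Override public List<Runnable> shutdownNow() { shutdown = true; return Collections.emptyList(); }
    @Override public boolean isShutdown() { return shutdown; }
    @Override public boolean isTerminated() { return shutdown; }
    @Override public boolean awaitTermination(long timeout, TimeUnit unit) { return shutdown; }

    /** Uses the inherited submit() to obtain a result through a Future. */
    static int demo() {
        SameThreadExecutorService executor = new SameThreadExecutorService();
        Future<Integer> future = executor.submit(() -> 6 * 7);
        try {
            return future.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```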

ThreadPoolExecutor

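1. The concrete implementation of the abstract class AbstractExecutorService. It initializes the thread pool, manages the life cycle of the pool and of its threads, and manages submitted tasks centrally. Analyzing the thread pool really means analyzing the implementation of ThreadPoolExecutor.

    public class ThreadPoolExecutor extends AbstractExecutorService {
        // body omitted
    }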

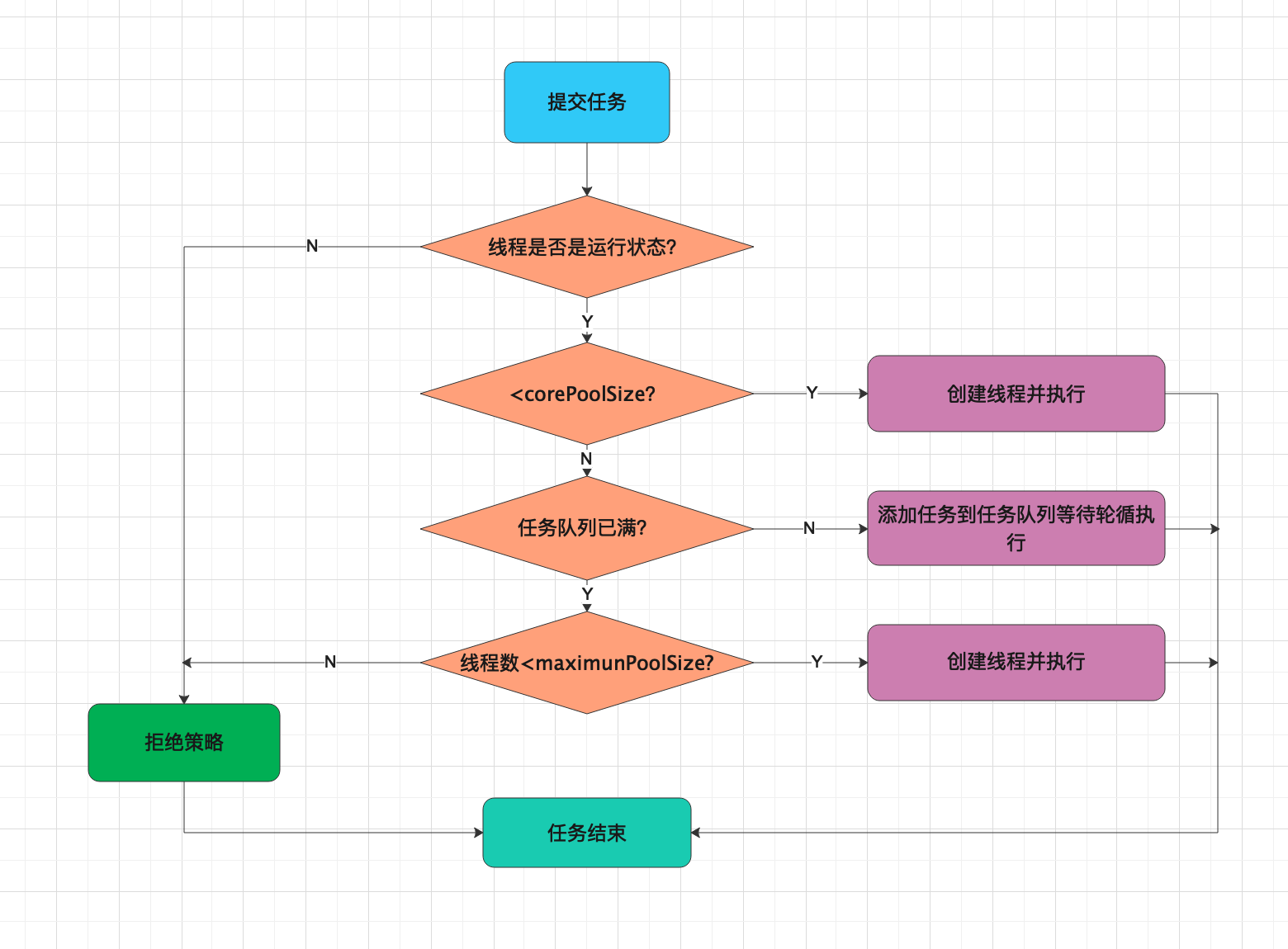

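3. Start with execute(), the scheduling entry point for every task a user submits. When a task is submitted, the pool checks its own state and limits such as the thread count to decide which path to take. There are four main branches:

workerCount < corePoolSize: create a new thread to run the submitted task.

workerCount >= corePoolSize: check whether the task queue is full; if it is not, add the task to the queue.

workerCount >= corePoolSize && workerCount < maximumPoolSize: if the task queue is full, create a new thread to run the task.

workerCount >= maximumPoolSize: if the task queue is full, apply the rejection policy; the default policy (AbortPolicy) throws an exception.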
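
The four branches can be observed with a small experiment. This is a sketch under assumed parameters (corePoolSize 1, maximumPoolSize 2, queue capacity 1); the class name `ExecuteBranchesDemo` is hypothetical. Every task blocks on a latch, so no worker becomes free while the tasks are being submitted.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class ExecuteBranchesDemo {
    static String run() {
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await(); // keep the worker busy until the experiment is over
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 0L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1));
        boolean rejected = false;
        try {
            pool.execute(blocker); // workerCount < corePoolSize: a core thread is created
            pool.execute(blocker); // core is busy and the queue has room: the task is queued
            pool.execute(blocker); // queue is full, workerCount < maximumPoolSize: a second thread is created
            try {
                pool.execute(blocker); // queue is full and workerCount == maximumPoolSize: rejected
            } catch (RejectedExecutionException e) {
                rejected = true;
            }
            return "poolSize=" + pool.getPoolSize()
                    + " queued=" + pool.getQueue().size()
                    + " rejected=" + rejected;
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints "poolSize=2 queued=1 rejected=true"
    }
}
```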

ThreadPoolExecutor-execute()

1. The scheduling entry point:

    public void execute(Runnable command) {
        if (command == null)
            throw new NullPointerException();
        /*
         * Proceed in 3 steps:
         *
         * 1. If fewer than corePoolSize threads are running, try to
         * start a new thread with the given command as its first
         * task. The call to addWorker atomically checks runState and
         * workerCount, and so prevents false alarms that would add
         * threads when it shouldn't, by returning false.
         *
         * 2. If a task can be successfully queued, then we still need
         * to double-check whether we should have added a thread
         * (because existing ones died since last checking) or that
         * the pool shut down since entry into this method. So we
         * recheck state and if necessary roll back the enqueuing if
         * stopped, or start a new thread if there are none.
         *
         * 3. If we cannot queue task, then we try to add a new
         * thread. If it fails, we know we are shut down or saturated
         * and so reject the task.
         */
        int c = ctl.get();
        if (workerCountOf(c) < corePoolSize) {
            if (addWorker(command, true))
                return;
            c = ctl.get();
        }
        if (isRunning(c) && workQueue.offer(command)) {
            int recheck = ctl.get();
            if (! isRunning(recheck) && remove(command))
                reject(command);
            else if (workerCountOf(recheck) == 0)
                addWorker(null, false);
        }
        else if (!addWorker(command, false))
            reject(command);
    }

    // The same method, lightly restructured to make it easier to analyze
    public void execute(Runnable command) {
        if (command == null) {
            throw new NullPointerException();
        }
        int c = ctl.get();
        // step 1: workerCount < corePoolSize, so create a thread directly to run the task
        if (workerCountOf(c) < corePoolSize) {
            if (addWorker(command, true))
                return;
            c = ctl.get();
        }
        // step 2: check that the pool is running and offer the task to the queue
        // (offer returns true if the queue has room and false if it is full)
        if (isRunning(c) && workQueue.offer(command)) {
            int recheck = ctl.get();
            if (! isRunning(recheck) && remove(command)) {
                reject(command);
            // step 4: the task was queued but no worker is alive,
            // so start a worker with no first task to drain the queue
            } else if (workerCountOf(recheck) == 0) {
                addWorker(null, false);
            }
        // step 3: the queue is full; addWorker checks whether the number of
        // workers is below maximumPoolSize and, if so, starts a new thread for the task
        } else if (!addWorker(command, false)) {
            // addWorker failed: the pool is at maximumPoolSize (or shut down),
            // so the rejection policy is applied
            reject(command);
        }
    }

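3. The task queue. BlockingQueue is the other key piece of the thread pool: acting as the buffer in a producer-consumer model, it decouples tasks from threads. The blocking queue holds tasks that cannot be processed yet, while worker threads keep taking tasks from it in a loop. A blocking queue is a common data structure. When it is empty, a thread taking an element waits until data arrives before continuing; when it is full, a thread adding an element waits until capacity is freed. This is the classic producer-consumer model: producers keep producing data, consumers keep consuming it, and the corresponding wait mechanism coordinates the two. The commonly used implementations are: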

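ArrayBlockingQueue: a bounded blocking queue backed by an array, FIFO, with an optional fairness policy for its lock.

LinkedBlockingQueue: an optionally bounded blocking queue backed by a linked list. The default capacity is Integer.MAX_VALUE, so the queue can keep growing until memory runs out.

PriorityBlockingQueue: an unbounded priority queue. Elements use natural ordering by default, and the order can be customized by implementing compareTo or supplying a Comparator; the relative order of elements with equal priority is not guaranteed.

DelayQueue: an unbounded delay queue built on a priority queue (PriorityQueue). An element can be taken only after its delay has expired.

LinkedBlockingDeque: a double-ended blocking queue backed by a linked list. Elements can be added and removed at both head and tail, which suits designs such as work stealing that aim to reduce contention between consumers.

SynchronousQueue: a blocking queue that stores no elements. Each put must wait for a matching take, otherwise the element cannot be added. It supports fair and non-fair modes.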
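
The capacity behaviour that matters to the thread pool can be checked directly. The sketch below (class and method names are made up for this example) shows that the non-blocking `offer` used by execute() returns false on a full bounded queue, that a SynchronousQueue accepts nothing unless a consumer is already waiting, and how blocking `put`/`take` pair up in a small producer-consumer run.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.SynchronousQueue;

final class BlockingQueueDemo {
    /** offer() on a full bounded queue returns false instead of waiting. */
    static boolean offerToFullArrayQueue() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        queue.offer("first");
        return queue.offer("second");
    }

    /** With no consumer waiting, a SynchronousQueue cannot accept an element. */
    static boolean offerToSynchronousQueue() {
        return new SynchronousQueue<String>().offer("task");
    }

    /** Capacity of a LinkedBlockingQueue created with the no-argument constructor. */
    static int defaultLinkedCapacity() {
        return new LinkedBlockingQueue<String>().remainingCapacity();
    }

    /** A producer thread puts 1..items; the caller takes them all and returns their sum. */
    static int produceAndConsume(int items) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++) {
                    queue.put(i); // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        try {
            for (int i = 0; i < items; i++) {
                sum += queue.take(); // blocks while the queue is empty
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(offerToFullArrayQueue());   // false
        System.out.println(offerToSynchronousQueue()); // false
        System.out.println(produceAndConsume(10));     // 55
    }
}
```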

ThreadPoolExecutor-Worker

1. Worker extends AbstractQueuedSynchronizer and implements Runnable. It extends AbstractQueuedSynchronizer mainly in order to implement an exclusive lock:

    private final class Worker extends AbstractQueuedSynchronizer implements Runnable {
        /** Thread this worker is running in. Null if factory fails. */
        final Thread thread;
        /** Initial task to run. Possibly null. */
        Runnable firstTask;

        /**
         * Creates with given first task and thread from ThreadFactory.
         * @param firstTask the first task (null if none)
         */
        Worker(Runnable firstTask) {
            setState(-1); // inhibit interrupts until runWorker
            this.firstTask = firstTask;
            // create the new thread
            this.thread = getThreadFactory().newThread(this);
        }
    }
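
For readers unfamiliar with AbstractQueuedSynchronizer, the following is a minimal sketch of an exclusive, non-reentrant lock built on it, in the same spirit as Worker (state 0 means unlocked, 1 means locked). `NonReentrantLockDemo` and `SimpleLock` are hypothetical names, and this is not the JDK's Worker code. The comments in the JDK source give non-reentrancy as the reason Worker does not simply use ReentrantLock; the comparison at the end shows the difference.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;
import java.util.concurrent.locks.ReentrantLock;

final class NonReentrantLockDemo {
    /** Exclusive lock: state 0 = unlocked, 1 = locked. The owner cannot acquire it twice. */
    static final class SimpleLock extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int unused) {
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false; // already held, even if the holder is the current thread
        }

        @Override
        protected boolean tryRelease(int unused) {
            setExclusiveOwnerThread(null);
            setState(0);
            return true;
        }

        void lock() { acquire(1); }
        boolean tryLock() { return tryAcquire(1); }
        void unlock() { release(1); }
    }

    /** Returns whether the thread that holds SimpleLock can take it a second time. */
    static boolean simpleLockReenters() {
        SimpleLock lock = new SimpleLock();
        lock.lock();
        try {
            return lock.tryLock();
        } finally {
            lock.unlock();
        }
    }

    /** Same question for ReentrantLock. */
    static boolean reentrantLockReenters() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        try {
            boolean again = lock.tryLock();
            if (again) {
                lock.unlock(); // undo the second hold
            }
            return again;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        System.out.println("SimpleLock re-enters: " + simpleLockReenters());       // false
        System.out.println("ReentrantLock re-enters: " + reentrantLockReenters()); // true
    }
}
```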

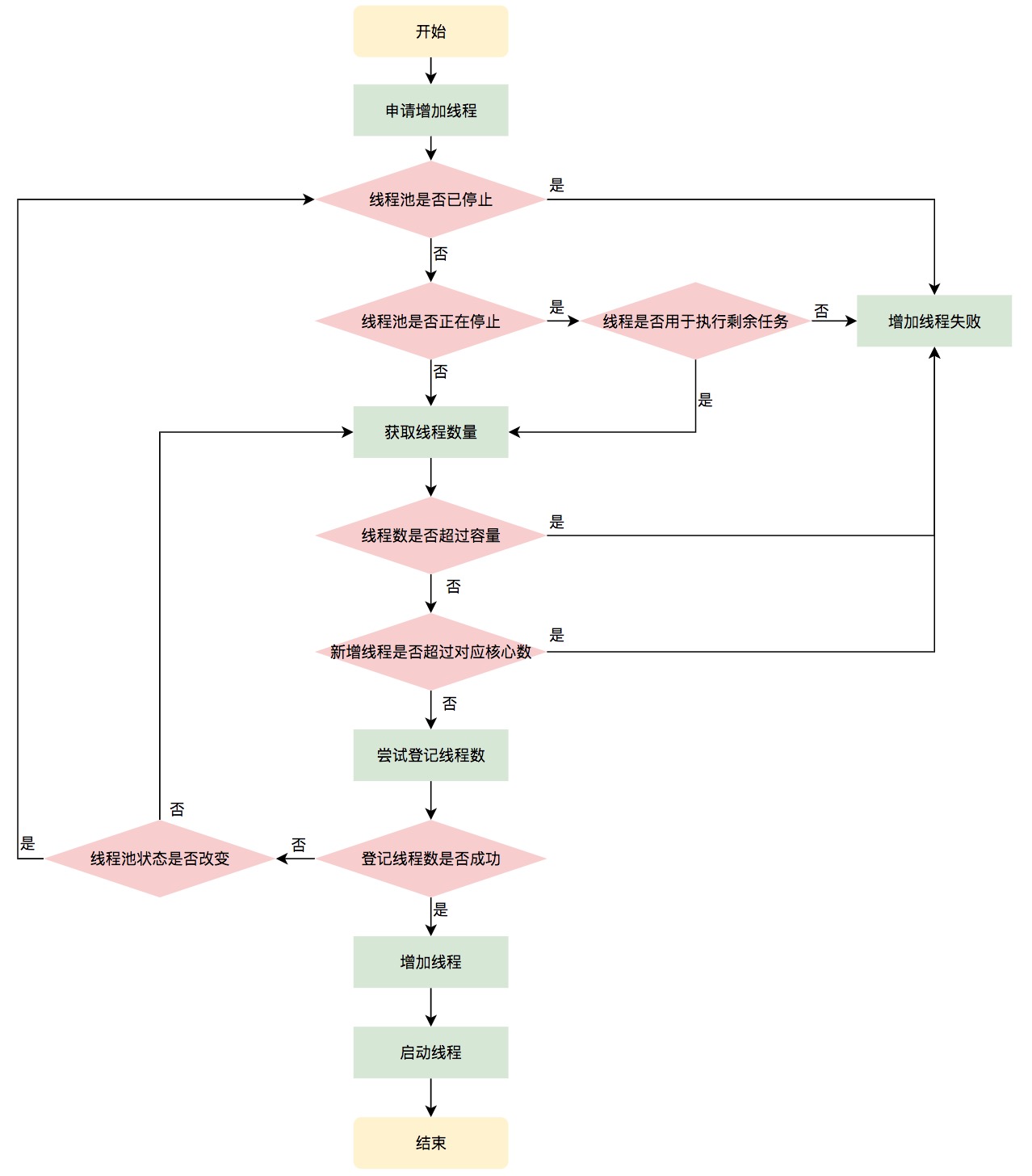

2. Worker holds a Thread and a firstTask, and its constructor is where the new thread gets created. Taking the case where the worker count is below corePoolSize as the example, here is the addWorker method:

    if (workerCountOf(c) < corePoolSize) {
        if (addWorker(command, true))
            return;
        c = ctl.get();
    }

    // command is non-null and core is true
    private boolean addWorker(Runnable firstTask, boolean core) {
        retry:
        for (;;) {
            // used later to compute the number of running threads
            int c = ctl.get();
            // run state of the pool
            int rs = runStateOf(c);

            // Check if queue empty only if necessary.
            if (rs >= SHUTDOWN &&
                ! (rs == SHUTDOWN &&
                   firstTask == null &&
                   ! workQueue.isEmpty()))
                return false;

            for (;;) {
                // number of worker threads
                int wc = workerCountOf(c);
                if (wc >= CAPACITY ||
                    wc >= (core ? corePoolSize : maximumPoolSize))
                    return false;
                if (compareAndIncrementWorkerCount(c))
                    break retry;
                c = ctl.get(); // Re-read ctl
                if (runStateOf(c) != rs)
                    continue retry;
                // else CAS failed due to workerCount change; retry inner loop
            }
        }

        boolean workerStarted = false;
        boolean workerAdded = false;
        Worker w = null;
        try {
            // create a new Worker; as noted earlier, its constructor creates a new thread
            w = new Worker(firstTask);
            final Thread t = w.thread;
            if (t != null) {
                // this step must hold the pool-wide lock
                final ReentrantLock mainLock = this.mainLock;
                mainLock.lock();
                try {
                    // Recheck while holding lock.
                    // Back out on ThreadFactory failure or if
                    // shut down before lock acquired.
                    int rs = runStateOf(ctl.get());

                    if (rs < SHUTDOWN ||
                        (rs == SHUTDOWN && firstTask == null)) {
                        if (t.isAlive()) // precheck that t is startable
                            throw new IllegalThreadStateException();
                        // workers is a HashSet; the task, wrapped in a Worker, is stored there
                        workers.add(w);
                        int s = workers.size();
                        if (s > largestPoolSize)
                            largestPoolSize = s;
                        workerAdded = true;
                    }
                } finally {
                    mainLock.unlock();
                }
                if (workerAdded) {
                    // start the thread; Worker implements Runnable, so its run method is what executes next
                    t.start();
                    workerStarted = true;
                }
            }
        } finally {
            if (! workerStarted)
                addWorkerFailed(w);
        }
        return workerStarted;
    }
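
addWorker relies on helpers such as runStateOf, workerCountOf and CAPACITY, which come from the way ThreadPoolExecutor packs two values into the single atomic integer ctl: the run state in the top 3 bits and the worker count in the remaining 29. The sketch below reproduces that packing as I understand it from the OpenJDK source; the class `CtlSketch` and the method `tryReserveWorker` are hypothetical, and the loop is a simplified stand-in for the inner retry loop above rather than the real implementation.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class CtlSketch {
    static final int COUNT_BITS = Integer.SIZE - 3;     // 29 bits hold the worker count
    static final int CAPACITY = (1 << COUNT_BITS) - 1;  // largest representable worker count

    // Run states live in the high 3 bits; RUNNING is negative, so "rs >= SHUTDOWN" means "not running".
    static final int RUNNING = -1 << COUNT_BITS;
    static final int SHUTDOWN = 0 << COUNT_BITS;
    static final int STOP = 1 << COUNT_BITS;

    static int runStateOf(int c) { return c & ~CAPACITY; }
    static int workerCountOf(int c) { return c & CAPACITY; }
    static int ctlOf(int rs, int wc) { return rs | wc; }

    final AtomicInteger ctl = new AtomicInteger(ctlOf(RUNNING, 0));

    /** Reserves one worker slot with a CAS loop, or returns false once the limit is reached. */
    boolean tryReserveWorker(int limit) {
        for (;;) {
            int c = ctl.get();
            if (runStateOf(c) != RUNNING || workerCountOf(c) >= limit) {
                return false;
            }
            if (ctl.compareAndSet(c, c + 1)) {
                return true; // the count occupies the low bits, so +1 increments it
            }
            // another thread changed ctl in the meantime; re-read and retry
        }
    }

    public static void main(String[] args) {
        CtlSketch sketch = new CtlSketch();
        System.out.println(sketch.tryReserveWorker(2)); // true
        System.out.println(sketch.tryReserveWorker(2)); // true
        System.out.println(sketch.tryReserveWorker(2)); // false
        System.out.println(workerCountOf(sketch.ctl.get())); // 2
    }
}
```

Reserving the slot with a CAS before the Worker is created is what lets addWorker enforce the thread limit without taking a lock for the check itself.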

3. In the run() method:

    /** Delegates main run loop to outer runWorker */
    public void run() {
        runWorker(this);
    }

    // again taking the case below corePoolSize as the example
    final void runWorker(Worker w) {
        Thread wt = Thread.currentThread();
        // firstTask is the submitted command, so it is non-null
        Runnable task = w.firstTask;
        w.firstTask = null;
        w.unlock(); // allow interrupts
        boolean completedAbruptly = true;
        try {
            // getTask fetches the next task from the blocking queue
            while (task != null || (task = getTask()) != null) {
                w.lock();
                // If pool is stopping, ensure thread is interrupted;
                // if not, ensure thread is not interrupted. This
                // requires a recheck in second case to deal with
                // shutdownNow race while clearing interrupt
                if ((runStateAtLeast(ctl.get(), STOP) ||
                     (Thread.interrupted() &&
                      runStateAtLeast(ctl.get(), STOP))) &&
                    !wt.isInterrupted())
                    wt.interrupt();
                try {
                    beforeExecute(wt, task);
                    Throwable thrown = null;
                    try {
                        // the state checks are done; this is where the task actually runs
                        task.run();
                    } catch (RuntimeException x) {
                        thrown = x; throw x;
                    } catch (Error x) {
                        thrown = x; throw x;
                    } catch (Throwable x) {
                        thrown = x; throw new Error(x);
                    } finally {
                        afterExecute(task, thrown);
                    }
                } finally {
                    task = null;
                    w.completedTasks++;
                    w.unlock();
                }
            }
            completedAbruptly = false;
        } finally {
            // when no more tasks can be fetched, the worker cleans itself up
            processWorkerExit(w, completedAbruptly);
        }
    }
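
runWorker calls beforeExecute and afterExecute around every task, and both are protected methods intended to be overridden. A minimal sketch of using them follows; `HookedPoolDemo` and `CountingPool` are hypothetical names, and the pool parameters are arbitrary. It counts how many tasks started and finished while a single worker drains the queue.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class HookedPoolDemo {
    /** Single-threaded pool that counts tasks through the execution hooks. */
    static final class CountingPool extends ThreadPoolExecutor {
        final AtomicInteger started = new AtomicInteger();
        final AtomicInteger finished = new AtomicInteger();

        CountingPool() {
            super(1, 1, 0L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(8));
        }

        @Override
        protected void beforeExecute(Thread t, Runnable r) {
            started.incrementAndGet();
            super.beforeExecute(t, r);
        }

        @Override
        protected void afterExecute(Runnable r, Throwable t) {
            super.afterExecute(r, t);
            finished.incrementAndGet();
        }
    }

    /** Runs n no-op tasks (n must fit in one worker plus the queue) and returns the finished count. */
    static int runTasks(int n) {
        CountingPool pool = new CountingPool();
        try {
            for (int i = 0; i < n; i++) {
                pool.execute(() -> { });
            }
            pool.shutdown();
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return pool.finished.get();
    }

    public static void main(String[] args) {
        System.out.println(runTasks(5)); // 5
    }
}
```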

Why is creating thread pools with Executors discouraged?

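1. Java's concurrency package provides the Executors class, whose main job is to offer four ways of creating a thread pool (factory methods). Underneath, they are all still based on ThreadPoolExecutor. Let's look at why creating thread pools this way is discouraged.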

newFixedThreadPool

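1. The corresponding factory method in the source creates a thread pool with a fixed number of threads:

    public static ExecutorService newFixedThreadPool(int nThreads) {
        return new ThreadPoolExecutor(nThreads, nThreads,
                                      0L, TimeUnit.MILLISECONDS,
                                      new LinkedBlockingQueue<Runnable>());
    }

In terms of ThreadPoolExecutor, this means corePoolSize = maximumPoolSize. The queue, however, is a LinkedBlockingQueue. As analyzed above, this linked-list-based blocking queue can hold up to Integer.MAX_VALUE tasks, so a backlog of tasks carries a risk of OOM.

It also uses the default rejection policy, which throws an exception, and the factory method offers no way to supply a different one.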
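
The unbounded queue can be confirmed at runtime. The sketch below casts the returned pool to ThreadPoolExecutor, which works with the factory source shown above but is an implementation detail rather than a documented guarantee; the class name `FixedPoolQueueDemo` and the helper `boundedFixedPool` are hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class FixedPoolQueueDemo {
    /** Remaining queue capacity of a pool created by Executors.newFixedThreadPool. */
    static int queueCapacityOfFixedPool() {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            // Assumption: the factory returns a plain ThreadPoolExecutor, as in the source above.
            ThreadPoolExecutor executor = (ThreadPoolExecutor) pool;
            return executor.getQueue().remainingCapacity();
        } finally {
            pool.shutdown();
        }
    }

    /** A fixed-size pool with the same thread settings but a bounded queue. */
    static ThreadPoolExecutor boundedFixedPool(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity));
    }

    public static void main(String[] args) {
        System.out.println(queueCapacityOfFixedPool() == Integer.MAX_VALUE); // true
        ThreadPoolExecutor bounded = boundedFixedPool(2, 100);
        System.out.println(bounded.getQueue().remainingCapacity());          // 100
        bounded.shutdown();
    }
}
```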

newSingleThreadExecutor

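1. Creates a single-threaded thread pool:

    public static ExecutorService newSingleThreadExecutor() {
        return new FinalizableDelegatedExecutorService
            (new ThreadPoolExecutor(1, 1,
                                    0L, TimeUnit.MILLISECONDS,
                                    new LinkedBlockingQueue<Runnable>()));
    }

A single thread executes the tasks, which amounts to serial execution, so tasks are guaranteed to run in submission order. It fits cases where a task depends on the one before it.

The drawback is the same: tasks pile up easily, so it is not suitable for high-concurrency workloads.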
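
A quick way to see the ordering guarantee, sketched with a hypothetical class `SingleThreadOrderDemo`: each task appends its own index to a shared list, and because only one worker drains the FIFO queue, the indices come out in submission order.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class SingleThreadOrderDemo {
    /** Submits tasks 0..n-1 and returns the order in which they actually ran. */
    static List<Integer> runInOrder(int n) {
        List<Integer> order = Collections.synchronizedList(new ArrayList<>());
        ExecutorService single = Executors.newSingleThreadExecutor();
        try {
            for (int i = 0; i < n; i++) {
                final int id = i;
                single.execute(() -> order.add(id));
            }
            single.shutdown();
            if (!single.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(runInOrder(5)); // [0, 1, 2, 3, 4]
    }
}
```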

newCachedThreadPool

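1. A cacheable thread pool:

    public static ExecutorService newCachedThreadPool() {
        return new ThreadPoolExecutor(0, Integer.MAX_VALUE,
                                      60L, TimeUnit.SECONDS,
                                      new SynchronousQueue<Runnable>());
    }

Because SynchronousQueue holds no elements, an arriving task is handed to an idle thread if one is available; otherwise a new thread is created. Idle threads time out after 60 seconds.

Although threads are created elastically, the maximum number of threads is Integer.MAX_VALUE, so there is likewise a risk of OOM.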
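
The thread growth is easy to reproduce on a small scale. In this sketch (hypothetical class `CachedPoolGrowthDemo`; the cast to ThreadPoolExecutor relies on the factory source shown above), every task blocks on a latch, so no thread is ever idle and each submission has to create a new one.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;

final class CachedPoolGrowthDemo {
    /** Submits blockedTasks tasks that all block, then reports how many threads the pool created. */
    static int threadsCreatedFor(int blockedTasks) {
        CountDownLatch release = new CountDownLatch(1);
        // Assumption: newCachedThreadPool returns a plain ThreadPoolExecutor, as in the source above.
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        try {
            for (int i = 0; i < blockedTasks; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // stay busy so the thread cannot be reused
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return pool.getPoolSize();
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(threadsCreatedFor(5)); // 5: one thread per blocked task
    }
}
```

With slow tasks and a high submission rate, the same mechanism keeps creating threads with no upper limit.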

newScheduledThreadPool

1. A thread pool for scheduled tasks:

    public static ScheduledExecutorService newScheduledThreadPool(int corePoolSize) {
        return new ScheduledThreadPoolExecutor(corePoolSize);
    }

    public ScheduledThreadPoolExecutor(int corePoolSize) {
        super(corePoolSize, Integer.MAX_VALUE, 0, NANOSECONDS,
              new DelayedWorkQueue());
    }

    public ScheduledThreadPoolExecutor(int corePoolSize,
                                       ThreadFactory threadFactory) {
        super(corePoolSize, Integer.MAX_VALUE, 0, NANOSECONDS,
              new DelayedWorkQueue(), threadFactory);
    }

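The constructor takes corePoolSize and passes Integer.MAX_VALUE as maximumPoolSize, which at first glance contradicts the many online articles that call this a fixed-size pool. In practice they are right: DelayedWorkQueue is unbounded and never reports itself full, so the pool never grows beyond corePoolSize and maximumPoolSize has no practical effect.

The drawback is again a risk of OOM, here because tasks can pile up in the unbounded queue. Scheduled execution is implemented through the DelayedWorkQueue delay queue.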
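
One way to check how many threads this pool actually creates is to block its workers and look at the counters. The sketch below uses a hypothetical class `ScheduledPoolSizeDemo` with a corePoolSize of 1; every submitted task waits on a latch, so any growth beyond the core size would show up in getPoolSize().

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ScheduledThreadPoolExecutor;

final class ScheduledPoolSizeDemo {
    /** Returns {current pool size, configured maximum pool size} after submitting blocking tasks. */
    static int[] poolSizes(int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ScheduledThreadPoolExecutor pool = new ScheduledThreadPoolExecutor(1);
        try {
            for (int i = 0; i < tasks; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the worker occupied
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return new int[] { pool.getPoolSize(), pool.getMaximumPoolSize() };
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int[] sizes = poolSizes(5);
        System.out.println("poolSize=" + sizes[0]);                           // poolSize=1
        System.out.println("max is MAX_VALUE: " + (sizes[1] == Integer.MAX_VALUE)); // true
    }
}
```

The extra tasks wait in the queue instead of triggering new threads, so the memory pressure under load comes from the queue.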

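2. All of these default pools use ThreadPoolExecutor's default rejection policy, and the factory methods give no way to plug in your own. ThreadPoolExecutor has four built-in rejection policies:

ThreadPoolExecutor.AbortPolicy: the default. When a task cannot be accepted, it throws RejectedExecutionException to notify the caller.

ThreadPoolExecutor.DiscardPolicy: discards the task without throwing an exception.

ThreadPoolExecutor.DiscardOldestPolicy: discards the task at the head of the queue, then resubmits the rejected task.

ThreadPoolExecutor.CallerRunsPolicy: runs the task on the thread that called execute.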
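
As an illustration of a non-default policy, the sketch below saturates a tiny pool (one thread, queue capacity 1, both arbitrary choices) configured with CallerRunsPolicy and records which thread ends up running the rejected task. The class name `CallerRunsDemo` is hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

final class CallerRunsDemo {
    /** Returns the name of the thread that ran the task rejected by a saturated pool. */
    static String threadOfRejectedTask() {
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1),
                new ThreadPoolExecutor.CallerRunsPolicy());
        AtomicReference<String> ranOn = new AtomicReference<>();
        try {
            pool.execute(blocker); // occupies the only worker
            pool.execute(blocker); // fills the queue
            // The pool is saturated, so the policy runs this task on the submitting thread.
            pool.execute(() -> ranOn.set(Thread.currentThread().getName()));
            return ranOn.get();
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("rejected task ran on: " + threadOfRejectedTask());
    }
}
```

Running the task on the caller slows the submitter down, which acts as a simple form of back-pressure instead of dropping work or throwing.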

Recommended way to create a thread pool

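1. Configure a ThreadPoolExecutor sensibly: the core thread count, the maximum thread count, the capacity of the task queue, and of course your own rejection policy, all tailored to the business at hand. The analysis of Executors shows that its pools are still built on ThreadPoolExecutor and carry a number of risks. Once developers understand how ThreadPoolExecutor behaves, they can build a pool that fits their own workload and treat Executors purely as a reference.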
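
A minimal sketch of such a configuration follows. The factory class `CustomPoolFactory`, the method `newBoundedPool`, the thread-name prefix, and all the numbers are placeholders chosen for illustration; suitable values depend on the workload. What matters is that every parameter is explicit: bounded queue, named threads, and a chosen rejection policy.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class CustomPoolFactory {
    /** Builds a pool with explicit sizes, a bounded queue, named threads and a chosen rejection policy. */
    static ThreadPoolExecutor newBoundedPool(String namePrefix, int core, int max, int queueCapacity) {
        AtomicInteger sequence = new AtomicInteger(1);
        // Named threads make thread dumps and logs easier to read.
        ThreadFactory factory = runnable ->
                new Thread(runnable, namePrefix + "-" + sequence.getAndIncrement());
        return new ThreadPoolExecutor(
                core, max,
                60L, TimeUnit.SECONDS,                      // idle time before non-core threads exit
                new ArrayBlockingQueue<>(queueCapacity),    // bounded, so a backlog cannot grow without limit
                factory,
                new ThreadPoolExecutor.CallerRunsPolicy()); // explicit choice instead of the default
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = newBoundedPool("order-worker", 2, 4, 100);
        pool.execute(() -> System.out.println("running on " + Thread.currentThread().getName()));
        pool.shutdown();
    }
}
```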